Designing context-aware identity verification for online exams

Improving candidate transparency and proctor decision-making at scale

Due to NDA restrictions, product names are anonymized and screens are reskinned.

OVERVIEW

Online exams rely on identity verification to establish trust at scale. However, discrepancies between candidate data and scanned documents often require manual review, creating friction for both candidates and proctors. Candidates receive little explanation when verification fails, while proctors lack sufficient context to make efficient, confident decisions.

This project reimagined identity verification as a context-aware workflow, improving transparency for candidates and accountability for proctors while reducing unnecessary manual effort.

Role

UI/UX Designer

Team

Product Manager

Design Team

AI/Engineering Team

Skills

Product Thinking, User Research, Info Architecture, Interaction Design, UX Writing, Cross-Functional Collaboration, Systems Thinking

Timeline

April 2023 - June 2023 (3 months)

OUTCOME

Reduced verification time by 50%

through clearer inputs and fewer escalations

Increased proctor efficiency by 30%

by preserving context across reviews

Improved transparency and user trust

during high-stress verification moments

CONTEXT

Assessmate is a suite of AI-powered products used to build, conduct, proctor, and grade online assessments at scale. Before starting an assessment, candidates were required to complete the following identity verification process:

Candidates completed a face scan using their webcam, followed by an ID card check. During early pilots, proctors manually reviewed this data and flagged anomalies when discrepancies were detected against registration details.

THE PROBLEM

Manual identity verification created friction on both sides of the system.

Proctors lacked standardized tools to understand why a verification failed, forcing them to make decisions with limited context and increasing review time and inconsistency. Candidates, meanwhile, received generic error messages with no explanation or guidance, often leading to repeated failed attempts and unnecessary support escalations - especially in legitimate edge cases such as name mismatches.

Problem Statement

How might we reduce manual identity verification effort for proctors while preserving human oversight and improving transparency for candidates?

RESEARCH METHODS

Candidate Surveys

To understand where candidates experienced friction during identity verification, I designed a post-test survey that received 70+ responses. The survey included scaled questions across key stages of the test flow, along with an open-ended question to capture unstructured feedback.

This research surfaced recurring issues around unclear failure feedback, repeated retries without guidance, and a lack of transparency when verification did not succeed - particularly in legitimate edge cases such as name mismatches or document variations.

Proctor Interviews

I conducted interviews with 8 proctors who participated in the pilot verification process. Discussions focused on how they reviewed failed verifications, what information they relied on to make decisions, and where breakdowns occurred in their workflow.

Proctors consistently described needing to make judgment calls with limited context, often reconstructing failure reasons manually or relying on assumptions when candidate intent or system behavior was unclear.

Key Insights

Unclear verification feedback led to confusion and repeated retries.

Candidates often did not understand why verification failed, resulting in unnecessary retries and increased stress, even in legitimate edge cases.

Edge cases were frequent but poorly supported.

Name mismatches, document variations, and environmental factors lacked structured handling, forcing workarounds for both candidates and proctors.

Manual verification increased inconsistency and cognitive load.

Without standardized context or supporting explanations, proctors relied on personal judgment, slowing reviews and reducing confidence in decisions.

Competitive Analysis

To understand how other platforms approached similar challenges, I analyzed leading online proctoring solutions including Mettl, Proctorio, and ProctorU, focusing on how they supported verification failures, edge cases, and reviewer decision-making.

Rather than feature parity, this analysis focused on how systems balanced scale, clarity, and human judgment.

No platform fully balances clarity, scale, and accuracy

Manual systems allow flexibility, while automated systems improve efficiency, but most fail to clearly explain verification outcomes to candidates.

Proctor confidence depends on visibility, not just control

Human reviewers performed better when comparison data and failure reasons were clearly surfaced, not when decisions were simply gated.

Edge cases expose the limits of both extremes

Systems that lack contextual handling struggle with legitimate variations, often increasing false flags or support escalations.

Scalability often comes at the cost of communication

As platforms optimize for volume, candidate-facing explanations and recovery paths tend to degrade.

Rather than identifying a clear best practice, the competitive landscape reinforced the need for a system that preserves context across stakeholders while reducing unnecessary manual effort.

EXPLORATIONS

Collaboration with AI Developers

Designing a more context-aware verification workflow required close collaboration with AI and backend engineers to understand technical constraints and opportunities. Together, we explored how system outputs could better support human decision-making rather than replace it.

Key areas of exploration included:

Optical Character Recognition (OCR)-based ID validation to extract and compare text fields against registration data.

Facial similarity thresholds to distinguish between minor discrepancies and meaningful mismatches.

Confidence-based flagging, replacing binary pass/fail outcomes with graded signals that better support human review.
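To make the idea of graded signals concrete, here is a minimal Python sketch of how extracted ID fields might be compared against registration data and graded instead of passed or failed. It is an illustration only, not the team's implementation: the threshold values, the `Signal` labels, and the use of `difflib` string similarity are all assumptions, and a facial similarity score could in principle be passed through the same `grade` function.

```python
from dataclasses import dataclass
from difflib import SequenceMatcher
from enum import Enum


class Signal(Enum):
    MATCH = "match"
    MINOR_DISCREPANCY = "minor_discrepancy"
    MISMATCH = "mismatch"


# Hypothetical thresholds, chosen only for illustration.
MATCH_THRESHOLD = 0.95
MINOR_THRESHOLD = 0.75


def normalize(text: str) -> str:
    """Lowercase and collapse whitespace so formatting differences are ignored."""
    return " ".join(text.lower().split())


def similarity(registered: str, extracted: str) -> float:
    """Return a 0..1 similarity ratio between two normalized strings."""
    return SequenceMatcher(None, normalize(registered), normalize(extracted)).ratio()


def grade(score: float) -> Signal:
    """Map a similarity score to a graded signal rather than a binary pass/fail."""
    if score >= MATCH_THRESHOLD:
        return Signal.MATCH
    if score >= MINOR_THRESHOLD:
        return Signal.MINOR_DISCREPANCY
    return Signal.MISMATCH


@dataclass
class FieldResult:
    field: str
    registered: str
    extracted: str
    score: float
    signal: Signal


def compare_fields(registered: dict[str, str], extracted: dict[str, str]) -> list[FieldResult]:
    """Compare each registered field with its OCR-extracted counterpart.

    A field missing from the OCR output is compared against an empty string,
    which scores 0 and is therefore graded as a mismatch.
    """
    results = []
    for field, reg_value in registered.items():
        ext_value = extracted.get(field, "")
        score = similarity(reg_value, ext_value)
        results.append(FieldResult(field, reg_value, ext_value, score, grade(score)))
    return results
```

Calling `compare_fields` with the registration record and the OCR output returns one result per field, so a name that differs only in casing or spacing grades as a match, while a partially different name can surface as a minor discrepancy for a proctor to review.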

These explorations directly informed how verification results, alerts, and comparison data were communicated to both candidates and proctors.

Usability Testing and Iteration

To validate the redesigned workflows, I conducted usability testing sessions with proctors using early prototypes of the verification interface. Sessions focused on how quickly reviewers could understand failure context, assess candidate explanations, and make confident decisions.

Insights from testing informed iterations around information hierarchy, language clarity, and the placement of candidate and proctor notes to reduce cognitive load and improve accountability.

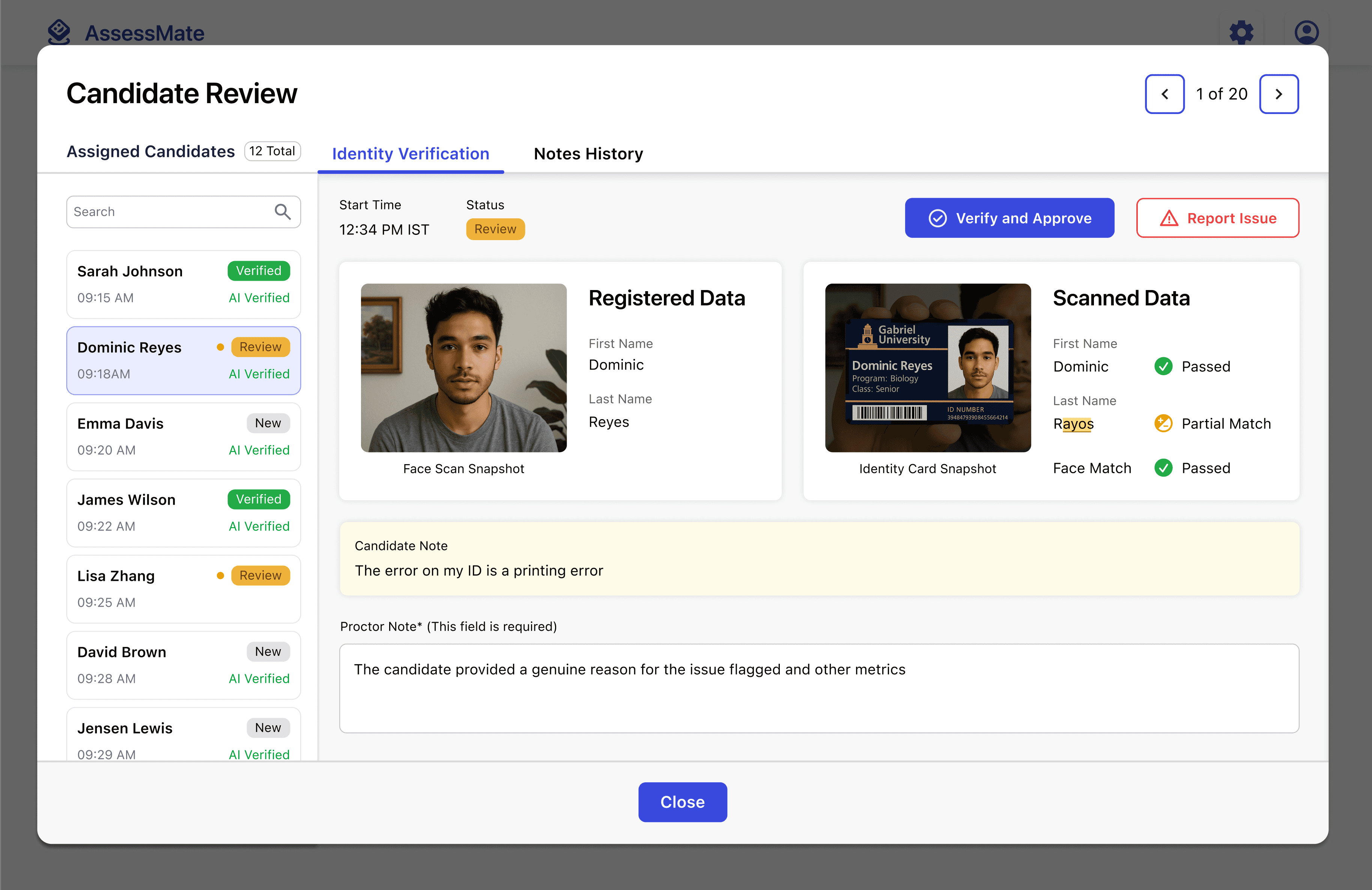

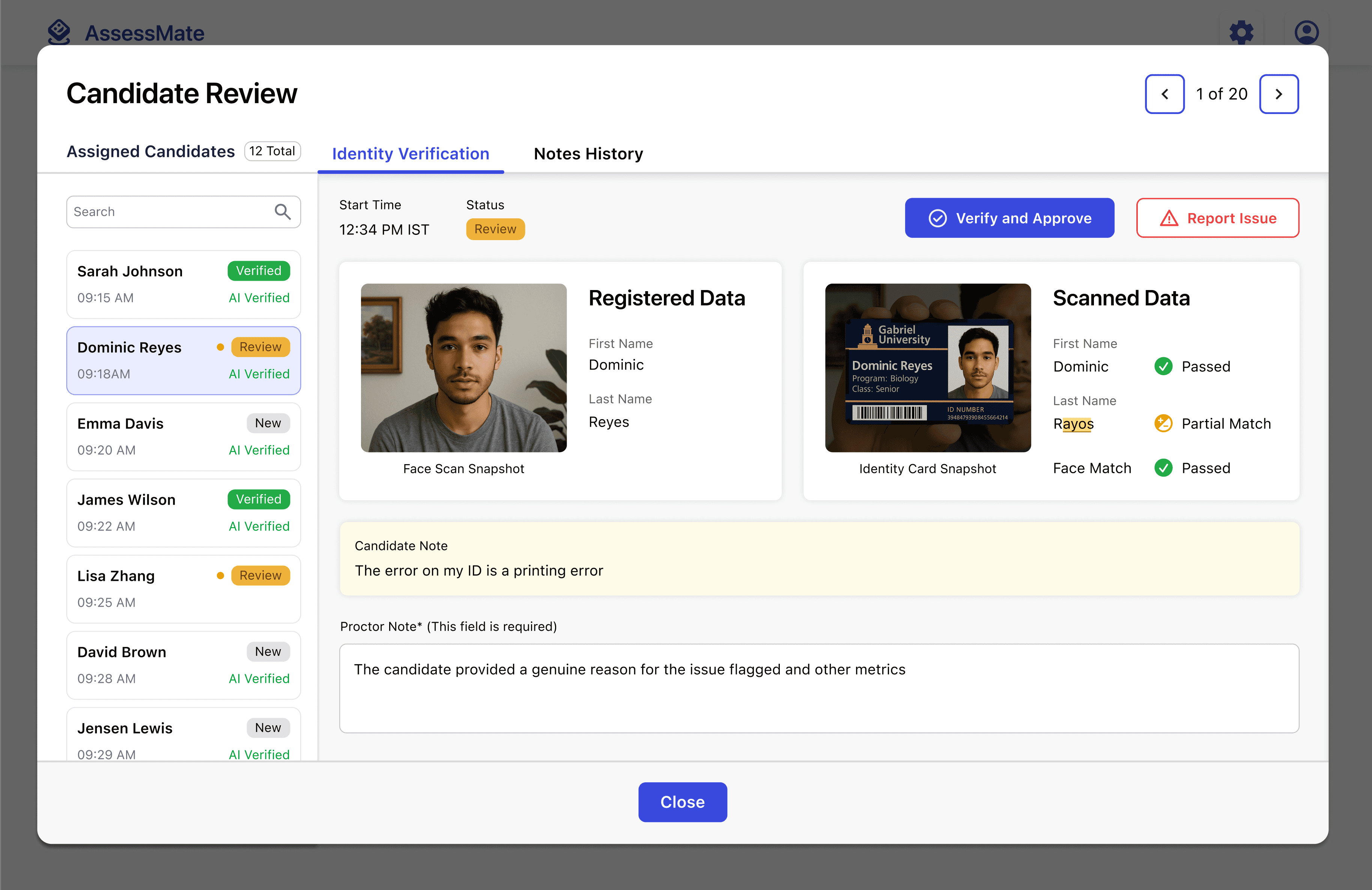

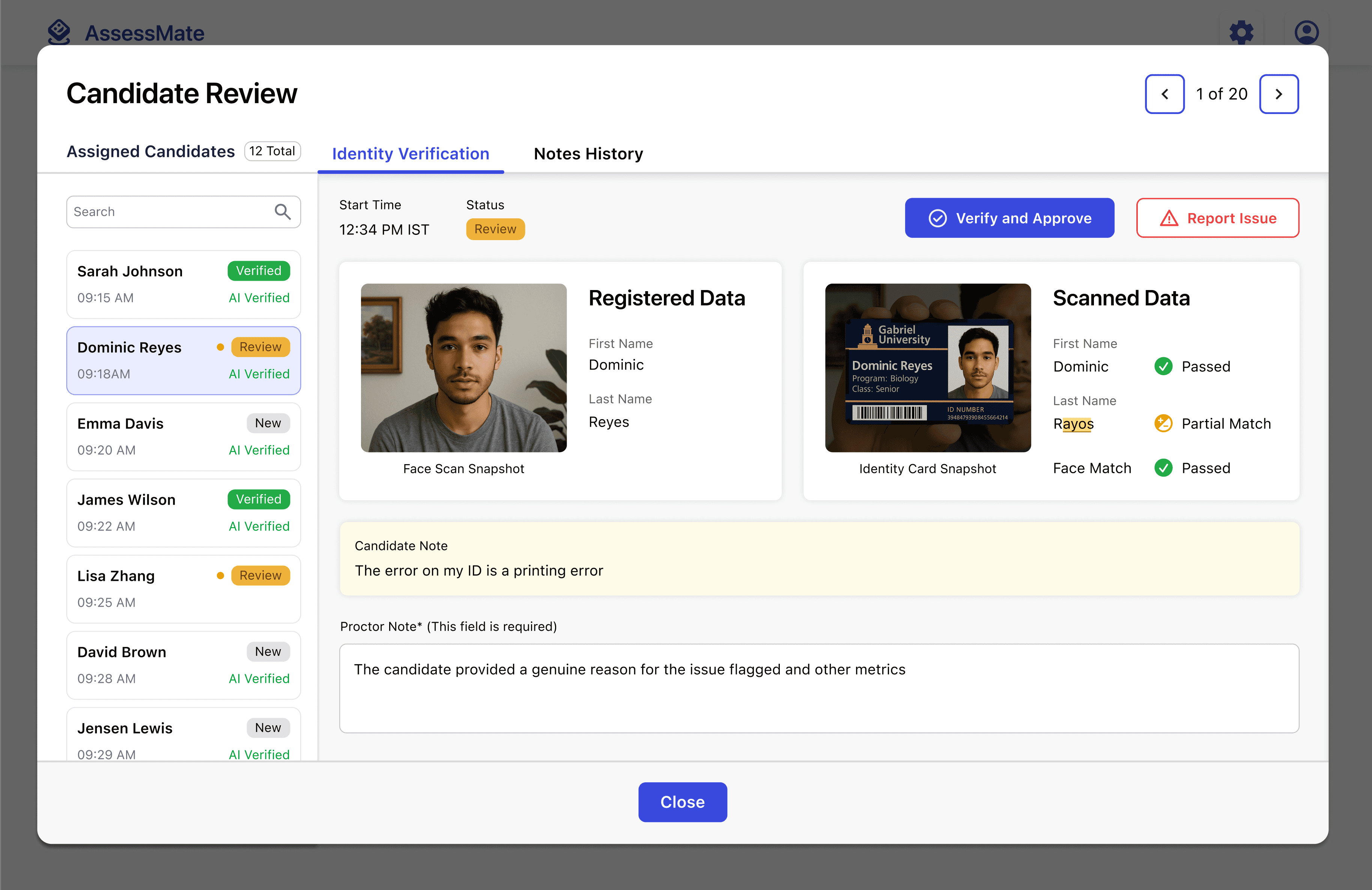

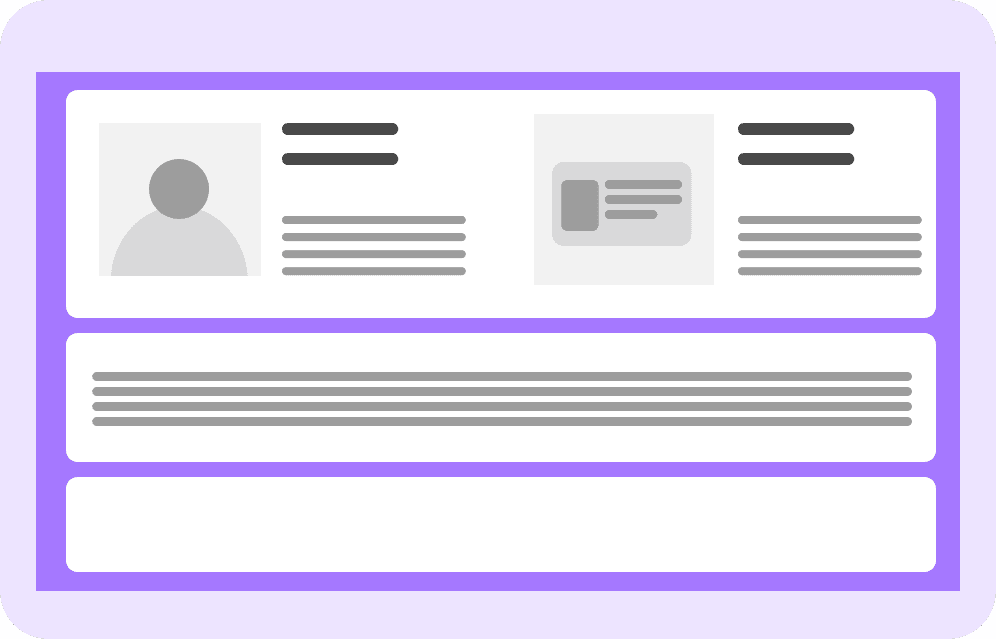

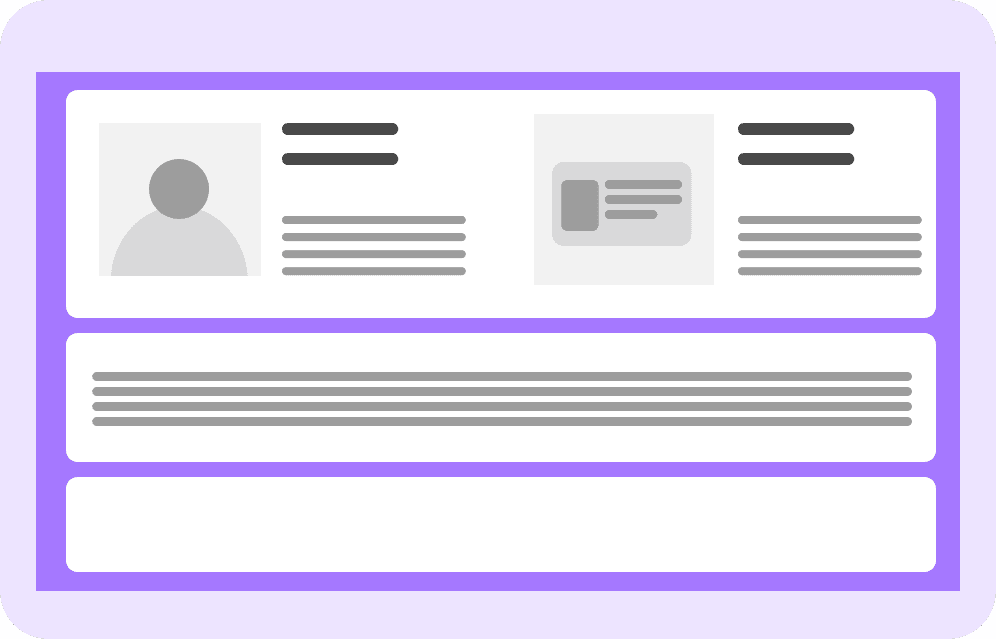

Layout variations for the proposed new proctor dashboard

Proctors preferred this layout because it allows direct comparison between the registered and scanned data.

DESIGN DECISIONS

End-to-End Verification Flow

This walkthrough shows the redesigned identity verification experience from the candidate’s initial scan through proctor review and final decision, highlighting how context and accountability are preserved across the flow.

Rather than treating verification as a single system decision, the workflow was redesigned as a sequence of human handoffs. Candidates could provide context, proctors reviewed with visibility into failure reasons, and decisions were documented to ensure accountability.

Candidate Experience

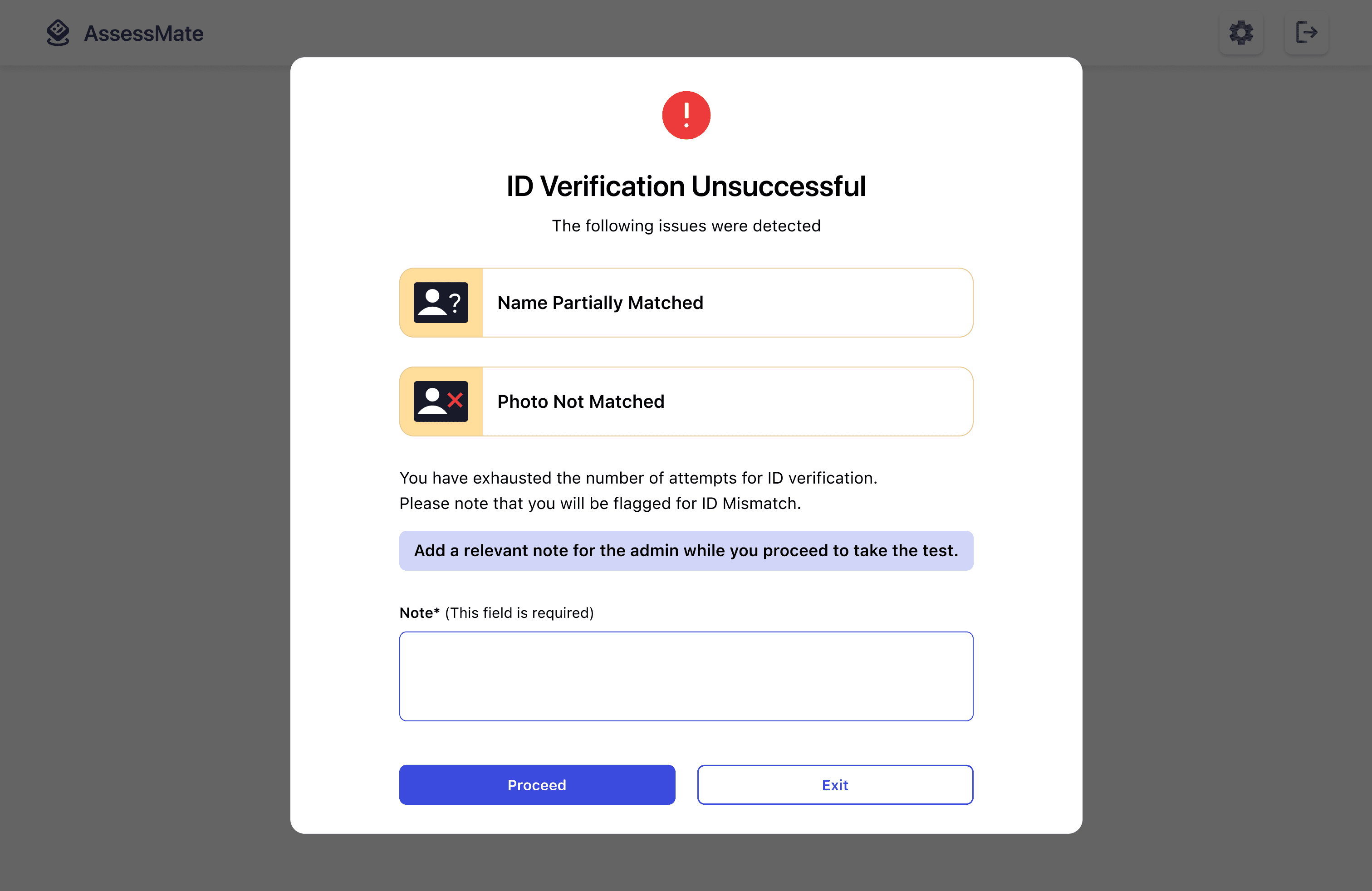

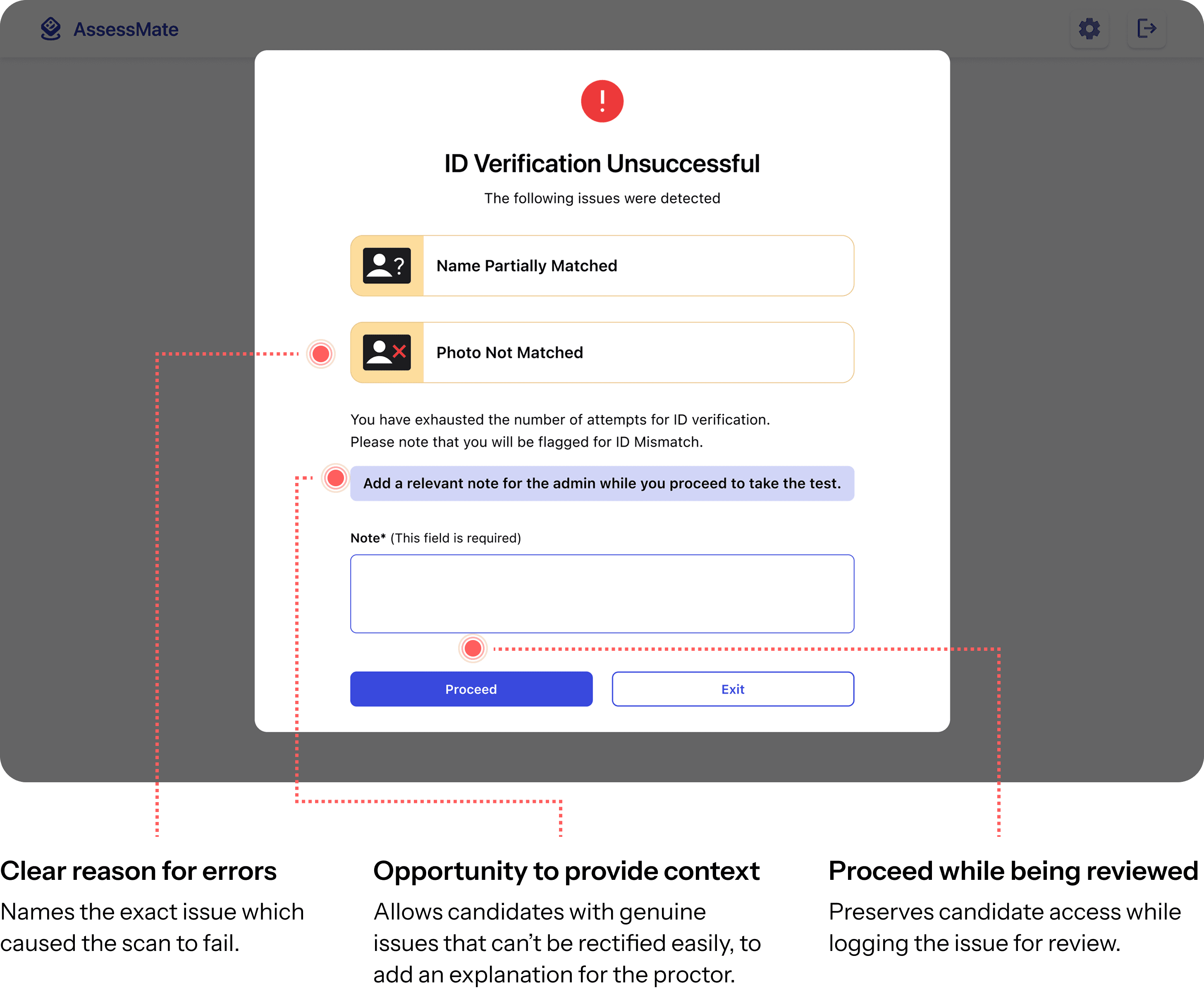

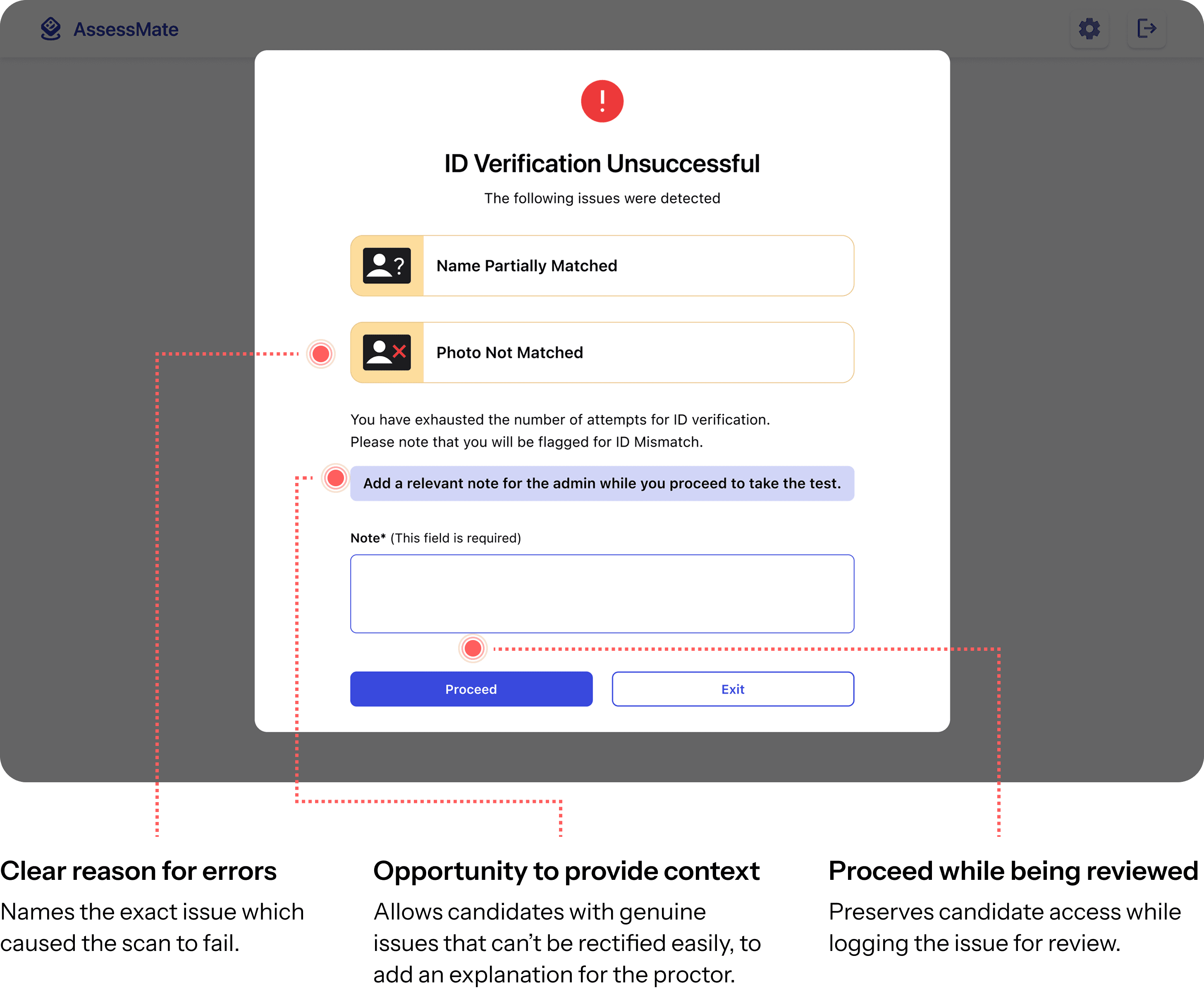

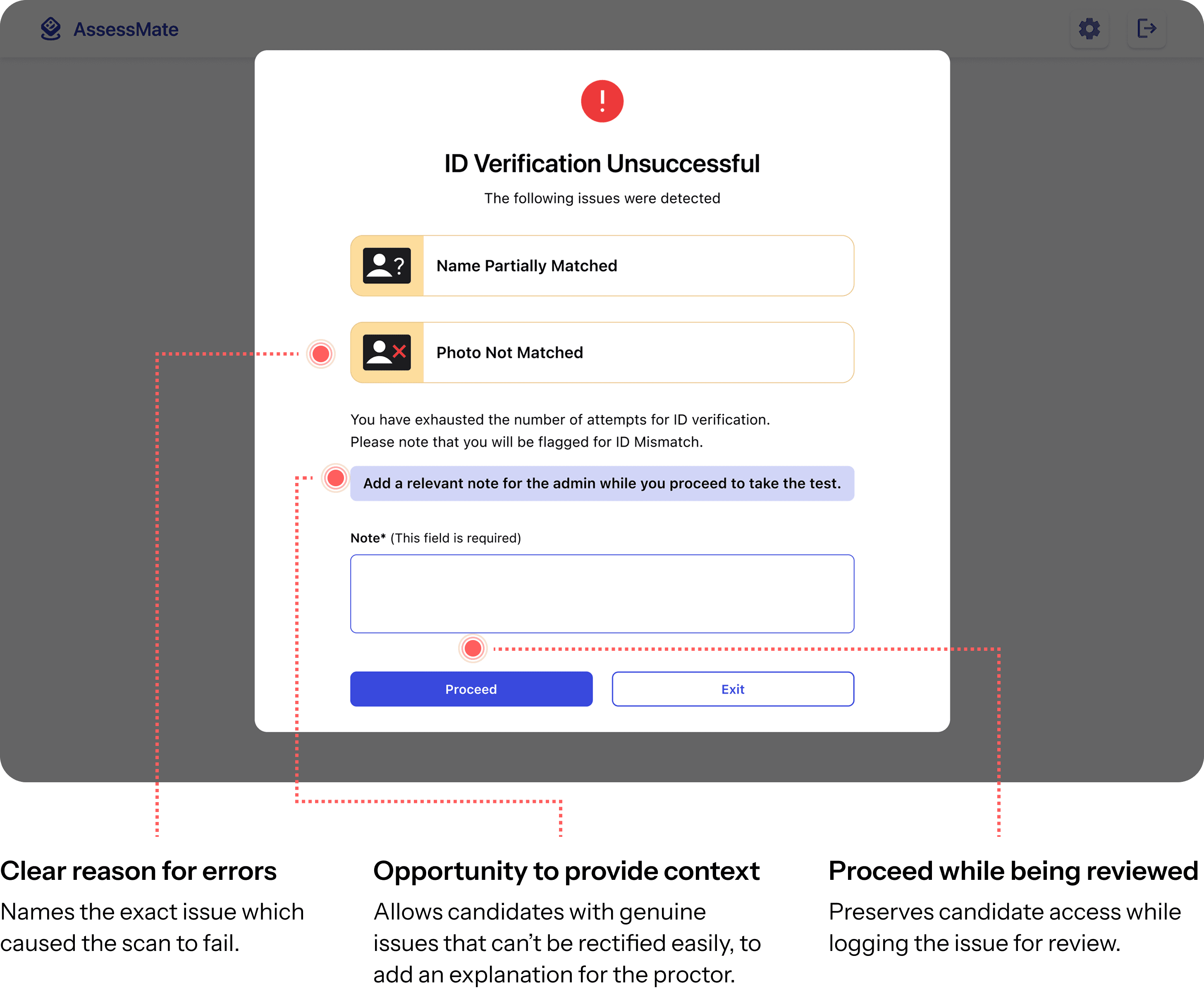

Transparent error messages

If the ID scan fails, the candidate sees a specific error message that explains what went wrong and how to correct it before retrying. The instructions, paired with a graphic, help candidates complete their exam setup in fewer attempts.

Clear reason for errors

Names the exact issue which caused the scan to fail.

Opportunity to provide context

Allows candidates with genuine issues that can’t be easily rectified to add an explanation for the proctor.

Proceed while being reviewed

Preserves candidate access while logging the issue for review.
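The three behaviors above can be sketched as a small mapping from failure reasons to candidate-facing feedback. This is a hedged illustration, not the shipped design: the reason codes, the message copy, and the rule that a candidate proceeds under review only after adding a note are all assumptions made for the example.

```python
from dataclasses import dataclass
from typing import Optional

# Hypothetical reason codes and copy; the product's real wording is not shown here.
MESSAGES = {
    "name_mismatch": (
        "The name on your ID does not match your registration.",
        "Rescan the ID you registered with, or add a note explaining the difference.",
    ),
    "id_unreadable": (
        "We could not read the text on your ID.",
        "Move to a well-lit area, hold the card flat, and scan again.",
    ),
    "face_not_detected": (
        "We could not detect your face in the scan.",
        "Face the webcam directly and remove anything covering your face.",
    ),
}

# Used when the system reports a reason this sketch does not know about.
FALLBACK = (
    "We could not verify your identity.",
    "Try again, or add a note so a proctor can review your case.",
)


@dataclass
class CandidateFeedback:
    reason: str                    # names the exact issue
    guidance: str                  # tells the candidate how to recover
    candidate_note: Optional[str]  # optional context for the proctor
    can_proceed: bool              # candidate keeps access to the exam
    needs_review: bool             # issue is logged for proctor review


def build_feedback(reason_code: str, candidate_note: Optional[str] = None) -> CandidateFeedback:
    """Build candidate-facing feedback for a failed scan.

    Assumption: a candidate who submits a non-empty note may proceed while the
    case is queued for review; otherwise they are asked to retry.
    """
    reason, guidance = MESSAGES.get(reason_code, FALLBACK)
    note = (candidate_note or "").strip() or None
    has_note = note is not None
    return CandidateFeedback(reason, guidance, note, can_proceed=has_note, needs_review=has_note)
```

Keeping the reason and the guidance as separate strings mirrors the split between naming the issue and explaining how to fix it, and it lets the copy be revised without touching the flow logic.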

Proctor Experience

Prioritized verification with clear comparisons

Each candidate is assigned a visible verification state (AI Verified, Review, New), allowing proctors to quickly focus on cases that need attention. Registered data and extracted ID data are displayed side by side with clear labels and match indicators, making discrepancies immediately scannable and reducing manual cross-checking.

Verification states for candidates

Proctors can focus on candidates that have been flagged for review.

Side-by-side comparison reduces mental effort

Proctors save time and cognitive effort because registered and scanned values sit in consistent, aligned positions.

Candidate context informs human judgment

Proctors can refer to the Candidate Note while writing a comment, thus taking accountability for their decision.

Final call remains with the proctor

Proctors can make the right call themselves, avoiding errors in edge cases.
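As a rough sketch of how these proctor-side rules fit together, the Python below models the three verification states, a review queue that surfaces flagged candidates first, and a decision step that refuses to record an outcome without a proctor note. The state names come from the design; the queue ordering, the `approved`/`rejected` labels, and all function and field names are assumptions for illustration.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Optional


class State(Enum):
    AI_VERIFIED = "AI Verified"
    REVIEW = "Review"
    NEW = "New"


# Assumed ordering: flagged cases first, then unprocessed ones, then AI-verified.
PRIORITY = {State.REVIEW: 0, State.NEW: 1, State.AI_VERIFIED: 2}


@dataclass
class Candidate:
    name: str
    state: State
    candidate_note: Optional[str] = None  # context supplied by the candidate
    decision: Optional[str] = None        # set only by a proctor
    proctor_note: Optional[str] = None    # required alongside any decision


def review_queue(candidates: list[Candidate]) -> list[Candidate]:
    """Return candidates ordered so those needing attention come first.

    sorted() is stable, so candidates in the same state keep their original order.
    """
    return sorted(candidates, key=lambda c: PRIORITY[c.state])


def record_decision(candidate: Candidate, approved: bool, proctor_note: str) -> Candidate:
    """Record the proctor's final call; an empty note is rejected."""
    if not proctor_note.strip():
        raise ValueError("A proctor note is required to record a decision.")
    candidate.decision = "approved" if approved else "rejected"
    candidate.proctor_note = proctor_note.strip()
    return candidate
```

Making the note a hard requirement in `record_decision` is one way to encode accountability in the data itself: every outcome is stored together with the reasoning behind it.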

REFLECTIONS

Hybrid AI + human verification builds trust at scale

I learned that automation is most effective when it reduces routine effort but still preserves human judgment for ambiguous cases, allowing the system to scale without undermining trust.

Transparent error messaging is as important as accuracy

Clear, semantically accurate feedback helped candidates understand verification outcomes, reducing frustration and repeated attempts even when the system could not immediately approve them.

Structured tools lead to more consistent decision-making

Providing proctors with labeled data, side-by-side comparisons, and required decision notes reduced subjectivity and improved confidence in verification outcomes across reviewers.

Overall, this project reinforced that trust isn’t built by stricter systems, but by clearer conversations between people :)

Since you’ve reached this far — Let’s Connect!

Reuben Crasto © 2025

Designed with intention, powered by protein shakes
